Measurements were made with the setup shown in the figure below. The system was similar to the one planned for the Inner Detector RADMON, except that a Keithley 220 with a switching matrix was used as the current source instead of the ELMB DAC.

The aim of the measurements was to determine the sensitivity of the system, especially of RADFETs.

Sensors were read out at set time intervals. After about 150 hours the RMSB was exposed to a 22Na source. The dose rate at the RMSB, measured with a Berthold monitor, was about 20 mSv/h.

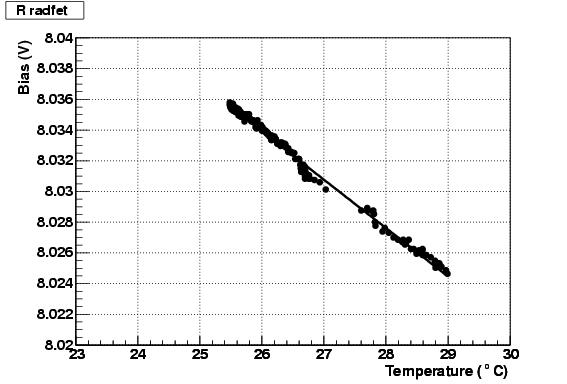

Gate voltage at a drain current of I = 160 mA as a function of temperature. A linear function was fitted to the data, and the fit results were used to correct the measurements for their temperature dependence.

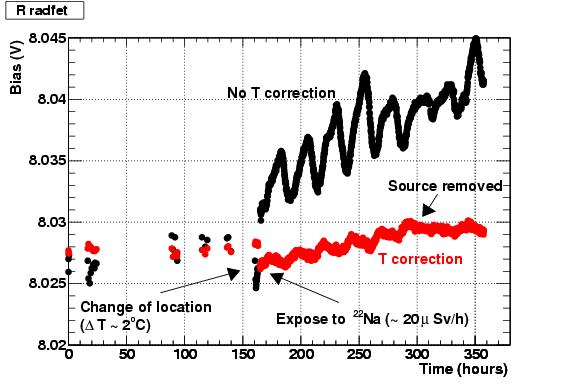
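The temperature correction described above can be sketched as follows. This is a minimal illustration, not the analysis code used for these measurements: the function names, the reference temperature of 20 °C, and the sample numbers are all assumptions made for the example. It fits a straight line to voltage versus temperature and then shifts every reading to the value it would have at the reference temperature.

```python
import numpy as np


def fit_temperature_dependence(temperature_c, voltage_v):
    """Least-squares straight line V = slope * T + intercept."""
    slope, intercept = np.polyfit(temperature_c, voltage_v, 1)
    return slope, intercept


def correct_to_reference(temperature_c, voltage_v, slope, t_ref_c=20.0):
    """Shift each reading to the reference temperature t_ref_c.

    t_ref_c = 20.0 is an arbitrary choice for this sketch.
    """
    temperature_c = np.asarray(temperature_c, dtype=float)
    voltage_v = np.asarray(voltage_v, dtype=float)
    return voltage_v - slope * (temperature_c - t_ref_c)


# Synthetic, made-up readings: a purely linear temperature dependence.
temps = np.array([18.0, 19.0, 20.0, 21.0, 22.0])
volts = 1.5 - 0.002 * (temps - 20.0)

slope, intercept = fit_temperature_dependence(temps, volts)
corrected = correct_to_reference(temps, volts, slope)
```

In practice the line would presumably be fitted only to data taken before the exposure, so that a radiation-induced voltage shift does not bias the fitted slope.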

Gate voltage at a drain current of I = 160 mA as a function of time. After about 150 hours the RMSB was exposed to radiation from a 22Na source. At the same time, the location of the RMSB on the bench was changed and the frequency of measurements was increased (owing to automation).

Black points are raw measurements (without temperature correction), in which the day-night temperature oscillations are clearly visible. Because the temperature correction is not perfect, residual oscillations remain after the correction. Still, the rise of the gate voltage after exposure to radiation is clearly visible, as is the change of slope after the source was removed.

It can be estimated that we are sensitive to a change of gate voltage of ~2 mV (which corresponds to a dose of about 1 mGy with these RADFETs).
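As a rough illustration of the figures quoted above, a gate-voltage shift can be converted to a dose with the approximate factor of 2 mV per mGy. The sketch assumes a linear response, which is an assumption of this example and is only reasonable for small shifts near the quoted sensitivity; the function and constant names are hypothetical.

```python
# Approximate conversion factor taken from the text: ~2 mV corresponds to ~1 mGy.
MV_PER_MGY = 2.0


def voltage_shift_to_dose_mgy(delta_v_mv, mv_per_mgy=MV_PER_MGY):
    """Convert a gate-voltage shift (mV) to dose (mGy), assuming linearity."""
    return delta_v_mv / mv_per_mgy


# A shift equal to the estimated sensitivity maps to about 1 mGy.
smallest_detectable_dose = voltage_shift_to_dose_mgy(2.0)
```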

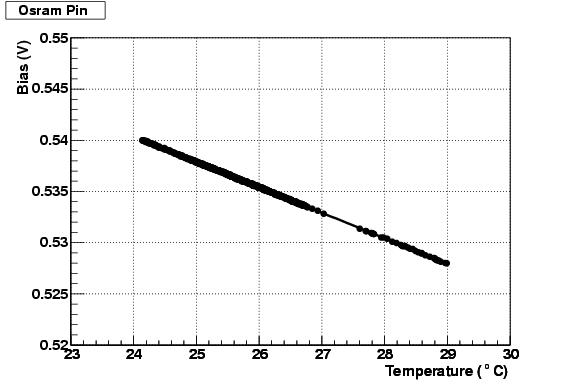

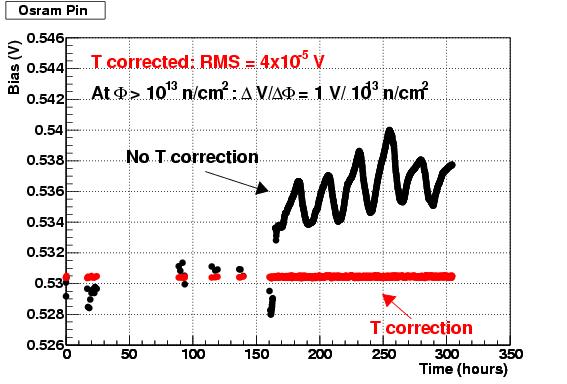

Similar plots are shown for measurements of Osram BPW34F PIN diodes. The bias voltage at a forward current of 1 mA is measured.

Bias voltage as a function of temperature. A linear function is fitted to the data and the results are used for the temperature correction.

Bias voltage as a function of time. After temperature correction, the RMS of the measured voltages is 4 × 10⁻⁵ V.
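A scatter figure of this kind could be computed as in the sketch below. It assumes that "RMS" means the root-mean-square deviation of the corrected voltages about their mean; the text does not state this explicitly. The function name and the sample values are invented for illustration.

```python
import numpy as np


def rms_scatter(values):
    """Root-mean-square deviation of the values about their mean."""
    values = np.asarray(values, dtype=float)
    return float(np.sqrt(np.mean((values - values.mean()) ** 2)))


# Made-up temperature-corrected bias voltages in volts.
corrected_v = np.array([0.50000, 0.50004, 0.49996, 0.50004, 0.49996])
scatter_v = rms_scatter(corrected_v)
```

With this definition the result equals the population standard deviation, so `np.std(corrected_v)` gives the same number.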